In 2012, several media headlines touted what seemed like a groundbreaking study, which claimed that techniques designed to make people think analytically could make them less religious. Half a decade on, however, multiple papers are calling the study's findings into question by suggesting that its underlying methods were flawed, and, what's more, the authors agree.

The original study dominated the headlines for a number of reasons. Firstly, its findings played into beliefs already widely held by the public about religion and analytical thinking, namely the assumption that religious people think less deeply. Additionally, there was the study's 'wow' factor: the prospect that psychologists could alter someone's deep-rooted beliefs, which is probably also what got the study accepted by the high-profile journal Science. But new research suggests that the techniques the authors used may not actually change how analytically people think.

The first experiment in the original study, by Will Gervais and Ara Norenzayan, who were then based at the University of British Columbia in Vancouver, Canada, found a (weak) negative correlation between religious belief and analytical thinking. In it, the authors asked people questions that would usually appear on a cognitive reflection test, a psychological task used to measure a person's tendency to override an incorrect gut response and look more deeply for the right answer. The authors found that people who tend to give the gut answer are likely to be more religious, while those who give the correct answer believe in God the least. Interestingly, even though the effect is tiny, it has been successfully replicated by other research groups.

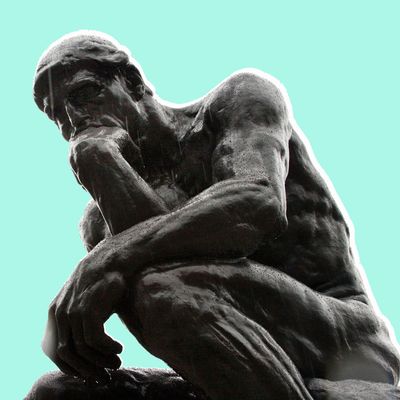

In the next four experiments, Gervais and Norenzayan went further, attempting to manipulate people's level of religious belief by altering how analytically they were thinking. In one experiment, they had participants look at either sculptor Auguste Rodin's The Thinker (to foster analytical thinking) or the Discobolus, an ancient Greek statue of a discus thrower (as a control). The study found a large difference between the two groups, but a repeat study published last month in PLOS ONE, with more than 900 participants in four different settings (compared with 57 in the original paper), detected no difference. Additionally, a 2015 study found that peering at images of The Thinker doesn't make people think more analytically anyway.

“We took out a big hammer for a small nail,” says Robert Calin-Jageman, last author of the recent PLOS ONE replication study, from Dominican University in River Forest, Illinois. “I certainly wouldn't have batted an eye in believing the study as gospel when it first came out,” he adds. But he and his co-authors didn't need to replicate the study's other experiments, he explains, since the techniques used in them have already been debunked by other papers.

Gervais and Norenzayan's next two experiments involved measuring religious belief in groups of people who had completed a word-problem task containing either analytical or neutral words. Again, they found that people exposed to analytical words reported lower levels of religious belief. Although these experiments have not been directly replicated, their methods have also been found to be flawed. According to Calin-Jageman, the same study that tested The Thinker also found the word-problem task to be ineffective in fostering analytical thinking. Calin-Jageman says he isn't sure why. “My thinking is that the techniques never could have worked — that the tendency to think analytically can't be dramatically boosted by such subtle treatments,” he says.

Finally, the study's remaining experiment reported lower levels of religious belief in participants who read the questionnaire in a hard-to-read font rather than an ordinary one, on the premise that difficult-to-read text triggers analytical thinking. But Calin-Jageman points out that a large 2015 analysis, conducted in part by the researchers who first reported the font effect, found that the font manipulation does not reliably trigger analytical thinking.

***

Calin-Jageman praised Gervais and Norenzayan for their openness with their data, their transparency, and their cooperation throughout the replication process, noting that 2012 was a different time, when researchers weren't yet connecting the dots about statistical validity. Notably, Gervais has also written a blog post admitting his mistakes and commending the replication paper's authors. In the post, Gervais notes that the original study was conducted between 2009 and 2010, and that his “methodological awakening” started around 2012 and continues today.

In recent years, psychology's replication crisis has uncovered a number of problems, such as p-hacking, hypothesizing after the results are known (“HARKing”), cherry-picking results, and underreporting unfavorable outcomes. Gervais noted in an interview that one reason so many problems are surfacing in social psychology is that the field's experiments are comparatively easy to replicate. “I'm optimistic about the direction our field is headed,” he writes in his blog, “though occasionally dismayed to see studies at least as weak as mine published in 2016.” In hindsight, Gervais admits, his paper had too few participants; in the future, he says, the field should produce fewer papers, with larger samples and more rigorous methodology.

Even though the original study's methods had fallen apart, the replication paper was rejected by two high-profile journals: Science (which published the original study) and Psychological Science. Rejecting papers with negative results feeds publication bias, another long-standing problem plaguing the scientific literature, in which journals preferentially publish positive findings that ultimately dominate the headlines. “Science itself is self-correcting, but Science the journal isn't,” Calin-Jageman says.

Calin-Jageman thinks the solution is a practice known as “results-free” peer review, in which journals accept or reject papers without seeing any results, a process that a few journals are currently exploring. “Filtering out data through this positive-negative lens, we'll never get the full story,” he says.

To Calin-Jageman, the original study's results look unusually clean. “They either got very lucky or they pushed their data to some extent because it's so consistent that, knowing what we know now, it shouldn't have come out so well,” he says. Nevertheless, “they followed all the rules that at the time made sense,” he notes; in the last six years, however, the rules have changed. “We've realized that the way we were doing things is really misleading.”